Botrista: Robot Barista

This project uses the Franka Emika Panda arm to brew a cup of pour-over coffee. It uses Intel RealSense cameras and AprilTags to detect the positions of objects and determine how to grasp them, and it uses MoveIt 2 to plan the trajectories. The project was completed over the course of 3 weeks.

Video Demo

Cup Detection

The coffee-making process begins with a cup being placed in the cup holder. The cup is detected using color detection, and its location is estimated using a Hough circle transform together with the RealSense depth image, which yields the transform of the cup. Once the coffee is made, this transform is used to pour it from the decanter into the cup.
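The step from a detected circle center in the image to a 3D cup position can be illustrated with the standard pinhole camera model. The following is a minimal sketch, not the project's code: the `Intrinsics` class, the `deproject` helper, and the numeric intrinsics are all hypothetical, and lens distortion is ignored.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Intrinsics:
    """Pinhole camera intrinsics in pixels (values used below are made up)."""
    fx: float
    fy: float
    cx: float
    cy: float


def deproject(u: float, v: float, depth_m: float, k: Intrinsics) -> tuple:
    """Convert pixel (u, v) with a depth in metres to a 3D point in the camera frame."""
    x = (u - k.cx) * depth_m / k.fx
    y = (v - k.cy) * depth_m / k.fy
    return (x, y, depth_m)


# Assumed example: circle center found at pixel (380, 240), depth reading 0.5 m.
k = Intrinsics(fx=600.0, fy=600.0, cx=320.0, cy=240.0)
cup_center = deproject(380.0, 240.0, 0.5, k)
print(cup_center)  # roughly 5 cm to the right of the optical axis, 0.5 m away
```

The resulting point is expressed in the camera frame; it still has to be transformed into the robot's frame before it can serve as a pouring target.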

MoveIt Wrapper

For this project we use a custom wrapper for the MoveIt 2 API. The purpose of this wrapper is to make implementation easier: it automatically fills in many of the message fields and offers a simpler way of planning trajectories. Some of the notable features of this wrapper are:

- A Grasp Planner

  - Allows the weight of the grasped object to be set

  - Has poses at which it approaches, grasps, and then retreats from an object's base location

- Planning

  - Plans a path to a position and orientation, and executes the plan subject to any constraints on the motion

- Cartesian Trajectory

  - Plans a Cartesian path through the waypoints passed to it

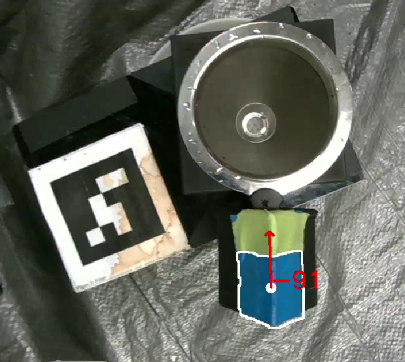
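To make the idea of waypoints for a Cartesian path concrete, here is a small sketch that generates evenly spaced positions along a straight line. It does not call MoveIt 2 or the wrapper; `linear_waypoints` is a hypothetical helper, and the coordinates are assumed values chosen only for illustration.

```python
def linear_waypoints(start, goal, steps):
    """Return `steps` evenly spaced (x, y, z) points from start (exclusive) to goal (inclusive)."""
    if steps < 1:
        raise ValueError("steps must be at least 1")
    return [
        tuple(s + (g - s) * i / steps for s, g in zip(start, goal))
        for i in range(1, steps + 1)
    ]


# Assumed example: lower the end effector 30 cm straight down in three steps.
for point in linear_waypoints((0.3, 0.0, 0.5), (0.3, 0.0, 0.2), 3):
    print(point)
```

A list like this is the kind of input a Cartesian planner consumes, with each point paired with a desired end-effector orientation.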

AprilTags

AprilTags were used for localization. A RealSense D435i was mounted on the ceiling so that it could see all of the AprilTags and locate the rough position of each station relative to the large central AprilTag. The following AprilTags were used:

- One large AprilTag on the table

  - This was used to localize the camera and served as the main reference point relating the Franka arm to the other AprilTags

- One AprilTag per station

  - Each station consisted of an AprilTag and a stand, both fixed onto an acrylic sheet

  - These allowed the robot to see the general location of each object

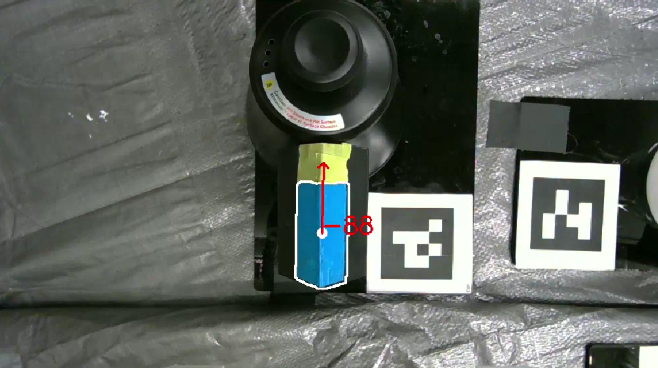
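Locating a station relative to the central tag amounts to composing two camera observations: the pose of the table tag in the camera frame and the pose of the station tag in the camera frame. The sketch below shows that composition with plain 4x4 homogeneous transforms. All function names and numbers are hypothetical; in a ROS 2 system this bookkeeping is normally delegated to tf2 rather than written by hand.

```python
Matrix = list  # a 4x4 homogeneous transform stored as a list of four rows


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply two 4x4 homogeneous transforms."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def invert_rigid(t: Matrix) -> Matrix:
    """Invert a rigid transform [R | p] as [R^T | -R^T p]."""
    rt = [[t[j][i] for j in range(3)] for i in range(3)]  # transpose of R
    p = [t[i][3] for i in range(3)]
    new_p = [-sum(rt[i][k] * p[k] for k in range(3)) for i in range(3)]
    return [rt[i] + [new_p[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def station_in_table_frame(cam_T_table: Matrix, cam_T_station: Matrix) -> Matrix:
    """Pose of a station tag expressed in the central table tag's frame."""
    return matmul(invert_rigid(cam_T_table), cam_T_station)


def translation(x: float, y: float, z: float) -> Matrix:
    """Pure translation, used here to build assumed example observations."""
    return [[1.0, 0.0, 0.0, x], [0.0, 1.0, 0.0, y], [0.0, 0.0, 1.0, z], [0.0, 0.0, 0.0, 1.0]]


# Assumed example: both tags are seen 2 m from the camera, offset sideways.
table_T_station = station_in_table_frame(
    translation(0.1, 0.0, 2.0), translation(0.4, -0.2, 2.0)
)
print([row[3] for row in table_T_station[:3]])  # station offset from the table tag
```

Because the robot base is calibrated against the same central tag, one further composition expresses each station in the robot's frame.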

Handle Detection

Using the RealSense D405 camera, we detected the handles of the objects by the blue and green tape attached to them. The detected tape localizes the handle so that the gripper can be oriented correctly to pick up the object.
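One way two colored markers can give both a position and an orientation is to treat their centroids as the two ends of the handle axis. The sketch below assumes exactly that, which the write-up does not confirm: `handle_pose_2d` is a hypothetical helper, and the centroid coordinates are made-up values in a planar frame.

```python
import math


def handle_pose_2d(blue_xy, green_xy):
    """Return the midpoint of the two marker centroids and the yaw of the blue-to-green axis."""
    bx, by = blue_xy
    gx, gy = green_xy
    center = ((bx + gx) / 2.0, (by + gy) / 2.0)
    yaw = math.atan2(gy - by, gx - bx)  # radians, measured from the +x axis
    return center, yaw


# Assumed example: markers 10 cm apart along a diagonal.
center, yaw = handle_pose_2d((0.0, 0.0), (0.1, 0.1))
print(center, math.degrees(yaw))
```

Using two distinct colors removes the 180 degree ambiguity that a single-color marker pair would leave in the handle direction.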

Grasping

Using the MoveIt wrapper, we broke the grasping of an object into 4 steps:

- Observe Pose: the pose above the AprilTag of the station, used to find the handle of the object

- Approach Pose: the pose set above the handle for grasping

- Grasp Pose: the pose on the handle to grasp the handle

- Retreat Pose: the pose of the gripper, which is now holding the object, above the station ready to move to the next stage
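The four poses above can be sketched as an ordered sequence of positions. This is an illustrative simplification, not the wrapper's API: `Point`, `offset_z`, `grasp_sequence`, and the heights are hypothetical, orientations are omitted, and the retreat pose is assumed to sit directly above the station tag.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Point:
    x: float
    y: float
    z: float


def offset_z(p: Point, dz: float) -> Point:
    """Return a copy of p raised by dz metres."""
    return Point(p.x, p.y, p.z + dz)


def grasp_sequence(tag: Point, handle: Point, observe_h: float,
                   approach_h: float, retreat_h: float) -> list:
    """Build the ordered (name, position) steps for one grasp.

    In practice the handle position only becomes known after the observe step;
    it is passed in up front here to keep the sketch short.
    """
    return [
        ("observe", offset_z(tag, observe_h)),
        ("approach", offset_z(handle, approach_h)),
        ("grasp", handle),
        ("retreat", offset_z(tag, retreat_h)),
    ]


# Assumed example values, in metres.
steps = grasp_sequence(Point(0.5, 0.2, 0.0), Point(0.55, 0.25, 0.10), 0.40, 0.15, 0.30)
for name, position in steps:
    print(name, position)
```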

Source code: GitHub

Group Members: Stephen Ferro, Carter DiOrio, Anuj Natraj, Kyle Wang, Jihai Zhao